Transitioning NATS Encoding to MessagePack

Microservices communication is a critical aspect of modern software architecture. While there are several encoding options like JSON and Protocol Buffers, the key is ensuring both sides use the same encoding to avoid unnecessary conversions. In our Node.js applications, we faced a significant challenge with encoding in NATS, a lightweight and fast messaging system employing a publish/subscribe model.

The Problem with JSON in NATS

Using NATS.js, our applications serialized large JavaScript objects into JSON strings for network transmission. JSON, while versatile, is text-based and inefficient for high-throughput applications. Our payloads ranged from 500KB to 5MB, which added up to gigabytes per second of network traffic. This inefficiency led to unacceptable latencies of up to 5 seconds, far exceeding our 300ms target.

Why Not HTTP?

HTTP, though popular, is ill-suited to real-time communication because of its request/response nature, which made it a poor fit for our need to handle thousands of messages per second.

Exploring Solutions

Reducing payload sizes was an initial strategy, but it proved insufficient due to architectural constraints and potential cost increases. After evaluating various alternatives, we decided to transition to a more efficient encoding method suitable for our high-volume, real-time requirements.

Why MessagePack?

In our search for a more efficient encoding method, I discovered MessagePack, a binary encoding that works with NATS because NATS treats message payloads as opaque bytes. MessagePack is language-agnostic and maps onto the same data model as JSON (objects, arrays, strings, numbers), so it integrated with only minimal code changes. This compatibility was crucial as our existing system was heavily reliant on JSON.

Performance Comparison: MessagePack vs. JSON

| Benchmark | Operations completed | Time (ms) | op/s |
|---|---|---|---|
| JSON Encode | 81,600 | 5,002 | 16,313 |
| JSON Decode | 90,700 | 5,004 | 18,125 |
| MessagePack Encode | 169,700 | 5,000 | 33,940 |
| MessagePack Decode | 109,700 | 5,003 | 21,926 |
| MessagePack Encode w/ Shared Structures | 190,400 | 5,001 | 38,072 |
| MessagePack Decode w/ Shared Structures | 422,900 | 5,000 | 84,580 |

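The table illustrates MessagePack's superior performance. Encoding is roughly twice as fast as JSON (33,940 vs. 16,313 op/s), and with shared structures decoding is more than four times as fast (84,580 vs. 18,125 op/s). Without shared structures the decoding gain is more modest, at about 20%.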
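The figures above have the shape of a time-boxed benchmark: run an operation repeatedly for about five seconds, count the iterations, and divide. Below is a minimal sketch of such a harness using only Node.js built-ins. The `timeBoxedBenchmark` helper, the sample payload, and the short 200 ms window are illustrative assumptions, not the harness that produced the table.

```javascript
const { performance } = require('node:perf_hooks');

// Run `fn` repeatedly until `durationMs` has elapsed, then report the counts.
// Hypothetical helper for illustration; not the harness behind the table above.
function timeBoxedBenchmark(fn, durationMs) {
  let operations = 0;
  let elapsed = 0;
  const start = performance.now();
  do {
    fn();
    operations += 1;
    elapsed = performance.now() - start;
  } while (elapsed < durationMs);
  return {
    operations,
    timeMs: elapsed,
    opsPerSecond: Math.round(operations / (elapsed / 1000)),
  };
}

// Made-up sample payload: many objects that share the same keys.
const samplePayload = Array.from({ length: 1000 }, (_, i) => ({
  id: i,
  type: 'message',
  data: 'Hello, world!',
}));
const sampleJson = JSON.stringify(samplePayload);

const encodeResult = timeBoxedBenchmark(() => JSON.stringify(samplePayload), 200);
const decodeResult = timeBoxedBenchmark(() => JSON.parse(sampleJson), 200);
console.log('JSON encode', encodeResult);
console.log('JSON decode', decodeResult);
```

To compare encoders, the same helper could be handed msgpackr's `pack` and `unpack` in place of the JSON calls. Absolute numbers will differ by machine and payload, so only the ratios are meaningful.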

Shared Structures: A Game Changer

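Shared structures, as implemented in the msgpackr library we use, optimize encoding and decoding by exploiting similarities in message patterns (such as the same keys on an object). Instead of writing every key name into every message, the encoder can refer to a previously seen set of keys. This approach resembles Protocol Buffers, but it works without predefined schemas, using heuristics to identify patterns.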
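To make the idea concrete, here is a toy sketch of key-list sharing. The `createStructureEncoder` helper and its array-based output are invented for illustration. This is not msgpackr's actual wire format or API, only the underlying idea: store each distinct key list once, then send an id plus the values.

```javascript
// Toy illustration of the shared-structure idea; NOT msgpackr's wire format.
// Each distinct key list is stored once; records carry only an id and values.
function createStructureEncoder() {
  const structures = [];        // structure id -> array of keys
  const idsByShape = new Map(); // serialized key list -> structure id

  function encode(record) {
    const keys = Object.keys(record);
    const shape = JSON.stringify(keys);
    let id = idsByShape.get(shape);
    if (id === undefined) {
      // First time this shape is seen: remember its keys.
      id = structures.length;
      structures.push(keys);
      idsByShape.set(shape, id);
    }
    return [id, ...keys.map((key) => record[key])];
  }

  function decode([id, ...values]) {
    const keys = structures[id];
    return Object.fromEntries(keys.map((key, i) => [key, values[i]]));
  }

  return { encode, decode, structures };
}

const codec = createStructureEncoder();
const first = codec.encode({ type: 'message', data: 'Hello', timestamp: 1 });
const second = codec.encode({ type: 'message', data: 'World', timestamp: 2 });

console.log(first);            // [ 0, 'message', 'Hello', 1 ]
console.log(second);           // [ 0, 'message', 'World', 2 ]
console.log(codec.structures); // [ [ 'type', 'data', 'timestamp' ] ]
console.log(codec.decode(second));
```

The key names appear once in `structures` rather than in every record, which is where the savings come from when messages share a shape. In this sketch the encoder and decoder share one table in memory. Across processes, both sides would need access to the same structure definitions.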

Why MessagePack Outperforms JSON

- Binary vs. Text: Binary formats are generally more compact and faster to process because they don't require parsing and interpreting human-readable text. This compactness reduces the amount of data that needs to be transmitted over the network, leading to faster data transfer speeds.

- Reduced Overhead: MessagePack's syntax has less overhead compared to the verbose nature of JSON (like braces, commas, and quotes). MessagePack uses fewer bytes to delimit data, which results in less overhead and smaller payloads.

- Efficient Serialization/Deserialization: MessagePack doesn't require parsing a string or converting data types from string representations. In binary formats, data types are preserved, which means there's no need to convert, for example, a number from a string back to a numeric type.

- Shared Structures: MessagePack optimizes the encoding and decoding process by recognizing and reusing repeated patterns. This significantly reduces the redundancy in the data, further enhancing the speed of operations. This is particularly beneficial for our use case, where we have high volumes of messages with similar structures.
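The first three points can be seen at the byte level. The sketch below is a deliberately tiny encoder written against the public MessagePack format for a small subset of types (null, booleans, integers 0 to 127, short strings, small arrays and maps). The `encodeTiny` function is a hypothetical teaching aid. A real library such as msgpackr covers the full format.

```javascript
// Minimal MessagePack encoder for a small subset of types, written only to
// show the byte layout. Use a real library in practice.
function encodeTiny(value) {
  if (value === null) return Buffer.from([0xc0]);
  if (value === true) return Buffer.from([0xc3]);
  if (value === false) return Buffer.from([0xc2]);
  if (Number.isInteger(value) && value >= 0 && value <= 0x7f) {
    return Buffer.from([value]); // positive fixint: the byte is the number
  }
  if (typeof value === 'string') {
    const utf8 = Buffer.from(value, 'utf8');
    if (utf8.length > 31) throw new RangeError('sketch handles strings up to 31 bytes');
    return Buffer.concat([Buffer.from([0xa0 | utf8.length]), utf8]); // fixstr
  }
  if (Array.isArray(value)) {
    if (value.length > 15) throw new RangeError('sketch handles arrays up to 15 items');
    const items = value.map((item) => encodeTiny(item));
    return Buffer.concat([Buffer.from([0x90 | value.length]), ...items]); // fixarray
  }
  if (typeof value === 'object') {
    const entries = Object.entries(value);
    if (entries.length > 15) throw new RangeError('sketch handles maps up to 15 keys');
    const parts = [Buffer.from([0x80 | entries.length])]; // fixmap
    for (const [key, item] of entries) {
      parts.push(encodeTiny(key), encodeTiny(item));
    }
    return Buffer.concat(parts);
  }
  throw new TypeError('type not covered by this sketch');
}

const sample = { type: 'message', ok: true, count: 3 };
const asJson = Buffer.from(JSON.stringify(sample), 'utf8');
const asMsgpack = encodeTiny(sample);
console.log(asJson.length, asMsgpack.length); // 38 25
```

The JSON form spends bytes on braces, quotes, colons, and commas, and spells `true` as four characters. The binary form uses a one-byte header per container or string and a single byte for the boolean and the small integer.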

What does this look like in production?

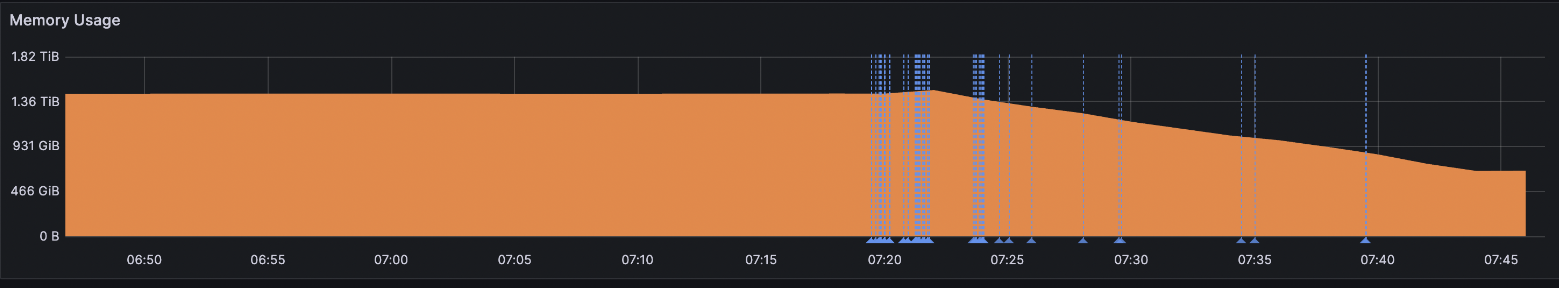

Memory Usage Efficiency

Before MessagePack: 1.4TiB of memory

After MessagePack: ~680GiB of memory

That's a reduction in memory usage of just over 52%!
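Because the two figures use different units, the percentage is easy to get wrong. A small worked calculation, assuming the binary units shown above (1 TiB = 1024 GiB) and treating the approximate "after" figure as exactly 680 GiB:

```javascript
const GIB_PER_TIB = 1024;

// Percentage by which `after` is smaller than `before` (same unit for both).
function percentReduction(before, after) {
  return ((before - after) / before) * 100;
}

const beforeGiB = 1.4 * GIB_PER_TIB; // 1.4 TiB expressed in GiB
const afterGiB = 680;                // approximate figure from the dashboard

console.log(beforeGiB.toFixed(1));                             // 1433.6
console.log(percentReduction(beforeGiB, afterGiB).toFixed(1)); // 52.6
```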

Okay then, what about the size of the messages?

MessagePack's efficiency is also evident in its handling of message sizes:

| Message Type | Size (bytes) |
|---|---|
| JSON | 105,770 |
| MessagePack | 88,684 |

With MessagePack, we observed a reduction in message size of roughly 16% (from 105,770 to 88,684 bytes). This reduction is crucial in high-throughput environments, enabling the transmission of more messages per second by reducing the amount of data that NATS carries over the network.
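As a rough illustration of what the smaller size means for throughput, the sketch below counts how many whole messages of each size fit through a fixed link per second. The 1 Gbit/s link speed is an assumption chosen for the example, and protocol overhead is ignored.

```javascript
// Whole messages that fit through a link per second (ignores protocol overhead).
function messagesPerSecond(linkBytesPerSecond, messageBytes) {
  return Math.floor(linkBytesPerSecond / messageBytes);
}

const LINK_BYTES_PER_SECOND = 125_000_000; // assumed 1 Gbit/s link

const jsonRate = messagesPerSecond(LINK_BYTES_PER_SECOND, 105_770);
const msgpackRate = messagesPerSecond(LINK_BYTES_PER_SECOND, 88_684);

console.log(jsonRate, msgpackRate);               // 1181 1409
console.log((msgpackRate / jsonRate).toFixed(2)); // 1.19
```

Under these assumptions, the same link carries about 19% more messages per second.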

Community Consensus

While this technique isn't widely known, public benchmarks and discussions echo our findings. Some argue the gains may not justify the transition in every scenario, but with large payloads and high throughput, these “minuscule” per-message gains add up quickly.

What does this look like in code?

The switch from JSON to MessagePack was straightforward. Here's a side-by-side comparison to highlight the changes:

Original JSON Encoding/Decoding

    // Producer using the JSON codec.
    // `nc` is assumed to be an open connection, e.g. `const nc = await connect(...)`.
    const { JSONCodec } = require('nats');
    const jsonCodec = JSONCodec();
    const payload = { type: 'message', data: 'Hello, world!', timestamp: Date.now() };
    const encodedPayload = jsonCodec.encode(payload);
    nc.publish('<TOPIC>', encodedPayload);

    // Consumer using the JSON codec (a separate service with its own `nc`).
    const { JSONCodec } = require('nats');
    const jsonCodec = JSONCodec();
    nc.subscribe('<TOPIC>', {
      callback: (err, msg) => {
        if (err) console.error(err);
        else console.log('Received:', jsonCodec.decode(msg.data));
      },
    });

New MessagePack Encoding/Decoding

    // Producer using MessagePack.
    // `nc` is assumed to be an open connection, e.g. `const nc = await connect(...)`.
    const { pack } = require('msgpackr');
    const payload = { type: 'message', data: 'Hello, world!', timestamp: Date.now() };
    const encodedPayload = pack(payload);
    nc.publish('<TOPIC>', encodedPayload);

    // Consumer using MessagePack (a separate service with its own `nc`).
    const { unpack } = require('msgpackr');
    nc.subscribe('<TOPIC>', {
      callback: (err, msg) => {
        if (err) console.error(err);
        else console.log('Received:', unpack(msg.data));
      },
    });

Because the change amounted to swapping the encode and decode calls, the transition was easy. Just plug and play!
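One way to keep call sites unchanged during such a swap is to hide the encoder behind the same `encode`/`decode` shape that the JSON codec exposes. The sketch below assumes a hypothetical `makeCodec` helper. It uses a JSON stand-in so it runs without dependencies, and shows the msgpackr variant only as a comment.

```javascript
// Wrap any pair of encode/decode functions behind a JSONCodec-like interface.
// `makeCodec` is a hypothetical helper, not part of nats.js or msgpackr.
function makeCodec(encodeFn, decodeFn) {
  return {
    encode: (value) => encodeFn(value),
    decode: (bytes) => decodeFn(bytes),
  };
}

// JSON stand-in so this sketch runs with no third-party packages.
const jsonCodec = makeCodec(
  (value) => Buffer.from(JSON.stringify(value), 'utf8'),
  (bytes) => JSON.parse(Buffer.from(bytes).toString('utf8'))
);

// With msgpackr installed, the MessagePack version would be:
//   const { pack, unpack } = require('msgpackr');
//   const msgpackCodec = makeCodec(pack, unpack);

const roundTripped = jsonCodec.decode(jsonCodec.encode({ type: 'message', data: 'Hello, world!' }));
console.log(roundTripped);
```

Publishers and subscribers then depend only on `codec.encode` and `codec.decode`, so switching formats is a one-line change where the codec is constructed. Both sides still have to switch together, since a JSON consumer cannot read MessagePack bytes.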

Other Applications of MessagePack: Redis Caching

Encouraged by our success with NATS, we extended the use of MessagePack to our Redis caching system. To further optimize, we incorporated zlib compression. Unlike with NATS, speed wasn't the primary concern for caching; rather, the focus was on reducing data size.
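A minimal sketch of the serialize-then-compress step, using Node's built-in `zlib` module. The `toCacheValue` and `fromCacheValue` helpers are hypothetical names. JSON is used as the default serializer only so the sketch has no dependencies, and the Redis write itself is omitted because it depends on the client library in use.

```javascript
const zlib = require('node:zlib');

// Serialize, then compress, before writing to the cache.
// The JSON defaults are stand-ins; with msgpackr they would be `pack`/`unpack`.
function toCacheValue(value, serialize = (v) => Buffer.from(JSON.stringify(v), 'utf8')) {
  return zlib.deflateSync(serialize(value));
}

// Decompress, then deserialize, after reading from the cache.
function fromCacheValue(buffer, deserialize = (b) => JSON.parse(b.toString('utf8'))) {
  return deserialize(zlib.inflateSync(buffer));
}

// Made-up sample data with a lot of repetition, which compresses well.
const rows = Array.from({ length: 500 }, (_, i) => ({
  id: i,
  status: 'active',
  region: 'us-east',
}));

const raw = Buffer.from(JSON.stringify(rows), 'utf8');
const cached = toCacheValue(rows);

console.log(raw.length, cached.length);     // uncompressed vs. compressed bytes
console.log(fromCacheValue(cached).length); // 500
```

The resulting `Buffer` is what would be stored under the cache key. The synchronous zlib calls keep the sketch short. In a busy service, the asynchronous variants may be preferable to avoid blocking the event loop.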

Redis Optimization

| Condition | CPU Time (ms) |
|---|---|
| Before MessagePack+zlib | 924.4 |
| After MessagePack+zlib | 11.5 |

Note: This table only represents the deserialization process, which was faster due to the smaller data size.

The result was staggering: a reduction in processing time of roughly 98.8% (from 924.4 ms to 11.5 ms). This improvement was a direct consequence of the smaller data size afforded by MessagePack and zlib compression.

Lessons Learned and Improvements

While the outcome was positive, there were areas where our approach could have been more robust:

- Monitoring Metrics: More comprehensive tracking of metrics, specifically CPU usage for encoding and decoding, would have provided deeper insights.

- Staging Environment Testing: Conducting extensive benchmarks and stress tests in a staging environment could have offered a more thorough understanding of the performance impacts.
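For the first point, Node's built-in `process.cpuUsage()` is one possible starting place. The sketch below measures the CPU time spent in a single encode or decode call. The `measureCpuMs` helper and the sample object are hypothetical, and JSON is used only so the example has no dependencies.

```javascript
// Measure CPU time (user + system, in milliseconds) spent while running `fn`.
// Note: process.cpuUsage() is process-wide, so background work is included.
function measureCpuMs(fn) {
  const before = process.cpuUsage();
  const result = fn();
  const delta = process.cpuUsage(before); // microseconds elapsed since `before`
  return { result, cpuMs: (delta.user + delta.system) / 1000 };
}

// Made-up payload, large enough for the cost to be measurable.
const bigObject = {
  items: Array.from({ length: 50_000 }, (_, i) => ({ id: i, name: `item-${i}` })),
};

const encodeSample = measureCpuMs(() => JSON.stringify(bigObject));
const decodeSample = measureCpuMs(() => JSON.parse(encodeSample.result));

console.log(`encode: ${encodeSample.cpuMs} ms CPU`);
console.log(`decode: ${decodeSample.cpuMs} ms CPU`);
```

Values like these could be reported to whatever metrics system is already in place, tagged by codec, so that before-and-after comparisons rest on measurements rather than impressions.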

Vocabulary

- Encoding/Serializing: The process of converting data into a format that can be easily transmitted or stored. For example, turning a JavaScript object into a JSON string.

- Decoding/Deserializing: The process of converting encoded data back into its original format. For example, converting a JSON string back into a JavaScript object.

- Codec: Short for coder-decoder. Software that can encode data into a specific format and decode data from that format. For example, a JSON codec can convert data to and from JSON.

- Protocol: A set of rules and conventions for communication between network devices. It defines how data is formatted and transmitted, as well as how to respond to various commands. Examples include HTTP, TCP/IP, and NATS.

- Message: In network communications, a message is a unit of data exchanged between systems. It often includes metadata like headers or identifiers to manage the communication process.

- Payload: The core data that is being transmitted in a message. In the context of network communications, it's the actual information (excluding headers or metadata) sent from sender to receiver.

- Microservice: A small, independent process that performs a specific function. A microservices architecture structures an application as a collection of such loosely coupled services.

- Throughput: The amount of data processed or transmitted in a given amount of time. In network communication, it often refers to the number of messages or the amount of data a system can handle per second.

- Latency: The time it takes for a message to travel from the sender to the receiver. In network communications, lower latency means faster message delivery.

- Binary Encoding: A method of data representation in which data is encoded as raw bytes rather than human-readable text. It's generally more compact and faster to process than text-based encodings like JSON.

- MessagePack: A binary serialization format that's more efficient than text-based formats like JSON. It's used for encoding complex data structures in a compact and fast manner.

- Shared Structures: A technique in data encoding where common patterns in data structures are identified and reused, reducing redundancy and improving efficiency in data encoding and decoding.

- zlib: A software library used for data compression. zlib can compress and decompress data, making it useful for reducing the size of data transmitted over networks or stored in databases.

- Real-time Communication: A type of communication where information is transmitted with minimal delay, allowing for immediate reception and response. It's crucial in systems that require up-to-date data exchange, like chat applications or stock trading systems.

- Scalability: The ability of a system, network, or process to handle a growing amount of work or to be expanded to accommodate that growth. It's an important consideration in designing systems that need to handle increasing loads over time.